
Journalistic mediation × algorithmic mediation

Platforms are increasingly present in our daily routines. But can we understand and visualize the many implications of inserting these agents, and their operating logic, into various social sectors?

One of the sectors most influenced by platform logic is the press. In this study, I propose to think specifically about the implications of inserting algorithmic mediation, the operational basis of digital media platforms, into journalistic mediation. To that end, I present two cases in which Facebook removed content in 2016. Both involve images that did not meet the platform’s standards and were therefore removed from circulation. How can these removals help us think about how algorithmic mediation interferes in the circulation of content?

“Platformization” describes the process by which platform logic penetrates social sectors, in a process of mutual shaping (Van Dijck et al., 2018). As far as journalism is concerned, Facebook provides professionals with an infrastructure, promising solutions to the problems the field faces but requiring, in return, adjustments to the practices and operating logics of institutions (Plantin et al., 2018).

Large digital platforms, such as the Big Five¹, organize data and information and make them available for the operation and viability of companies in various sectors (Van Dijck et al., 2018). In this process, they reach enormous scale, rivaling and even surpassing older infrastructures, and reconfigure and transform business processes along the way (Plantin et al., 2018). They merge with previous infrastructures and force companies to adjust their legal and productive structures to the platforms’ operating logics.

Platforms provide software and hardware, as well as services that help encode social activities into computer architectures. According to Van Dijck (2013), “They process (meta)data through algorithms and formatted protocols before presenting their interpreted logic in the form of user-friendly interfaces with default settings that reflect the platform owner’s strategic choices” (p. 29).

By delegating many of the choices made in their environments to algorithms, platforms inaugurate a new logic of knowledge (Gillespie, 2018a). The logic of algorithmic selection differs from the editorial logic practiced by journalists. Whereas editorial choices are made by specialists, professionals trained to make them, algorithmic selection “depends on the proceduralized choices of a machine, designed by human operators to automate some proxy of human judgment or unearth patterns across collected social traces” (Gillespie, 2018b, p. 117). This matters because “platforms are intricate, algorithmically managed visibility machines. . . . They grant and organize visibility, not just by policy but by design” (Gillespie, 2018b).

Therefore, discussing the implications of algorithmic mediation in journalistic mediation means discussing the implications of inserting the logic of platforms into the journalism sector. For a more operational definition of algorithms, we can refer to Introna (2016), who explains that algorithms express “the computational solution in terms of logical conditions (knowledge about the problem) and structures of control (strategies for solving the problem), leading to the following definition: algorithms = logic + control (Kowalski, 1979)” (p. 21).

It is worth noting that I start from the premise that journalism is an important social institution for democracy, because it keeps citizens informed about global and local events. Furthermore, I understand that the act of “mediating” (Latour, 2012) necessarily transforms what is mediated, taking mediation as synonymous with action. In other words, when an agent takes part in a mediation process, it necessarily intervenes in, acts on, and influences that process.

When we look at how journalistic information circulates on digital platforms, we see several new layers of mediation added to those performed by journalists. The problem is that platforms usually render these layers invisible by insisting on presenting themselves as “intermediaries” and facilitators, in other words, as agents that do not interfere in the processes they host. For Gillespie (2018a), this happens because portraying an algorithm as impartial “certifies it as a reliable socio-technical actor that gives relevance and credibility to its results and maintains the apparent neutrality of the provider in the face of the millions of evaluations it makes” (p. 107).

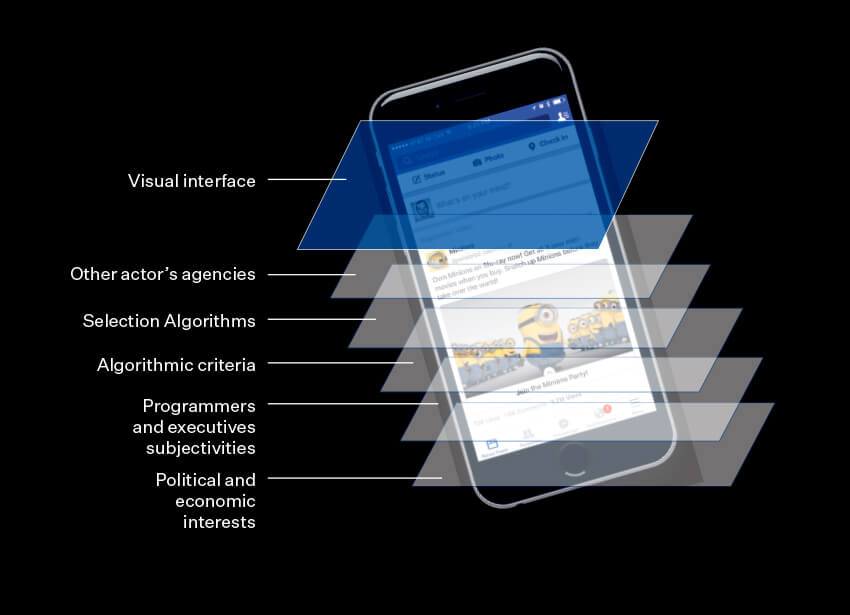

Figure 1 illustrates some of the layers of mediation that shape access to information on digital media platforms, in this case the Facebook interface (the user’s newsfeed) as accessed on a smartphone.

Example of mediation layers on Facebook access.

Source: Amanda Jurno

However, like any mediation, the mediations carried out by platforms also shape the processes they take part in. We cannot ignore, for example, the human dimension of algorithms and digital platforms (Diakopoulos, 2015): defining their basic criteria, an essential step for them to work at all, implies a limited view of meaning and reproduces the subjectivities of the people who created them. According to O’Neil (2017), mathematical models such as Facebook’s algorithms are never objective and always carry the subjectivity and biases of their programmers: “Models are opinions embedded in mathematics. . . . In each case, we must ask not only who designed the model but also what that person or company is trying to accomplish” (p. 21).

And what does Facebook intend when it sets the criteria for its newsfeed algorithms? According to the platform, the newsfeed seeks to “present the most important stories to you every time you open Facebook,”² but it does not explain what it considers “important” or how that importance is determined. According to official information, posts are scored on a series of criteria such as publication date, proximity between the person who posted and the person who will read, engagement, and type of media, among others (Jurno, 2016). Once scored, they are distributed and ordered for display to users. But since every selection implies an exclusion, if some posts are chosen for display, many others are not.
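Facebook does not disclose how these criteria are combined, so what follows is only a hypothetical Python sketch of the general mechanism, not the platform’s actual algorithm. The field names (`age_hours`, `affinity`, `engagement`, `media_type`), the multiplicative formula, and the weights in `MEDIA_WEIGHT` are all invented for illustration. It shows the structural point made above: once posts are scored and only a limited number of feed slots is filled, everything below the cut is simply not displayed.

```python
from dataclasses import dataclass

# Hypothetical weights per media type; Facebook has never published real values.
MEDIA_WEIGHT = {"video": 1.5, "photo": 1.2, "link": 1.0, "text": 0.8}


@dataclass
class Post:
    post_id: str
    age_hours: float   # publication date, as hours since the post was made
    affinity: float    # proximity between poster and reader, from 0 to 1
    engagement: int    # reactions + comments + shares
    media_type: str    # "video", "photo", "link" or "text"


def score(post: Post) -> float:
    """Fold the criteria into a single number (an invented, illustrative formula)."""
    recency = 1.0 / (1.0 + post.age_hours)        # newer posts score higher
    proximity = 1.0 + post.affinity               # closer ties score higher
    popularity = 1.0 + post.engagement / 100.0    # more engagement scores higher
    media = MEDIA_WEIGHT.get(post.media_type, 1.0)
    return recency * proximity * popularity * media


def build_feed(posts: list[Post], slots: int) -> tuple[list[Post], list[Post]]:
    """Split posts into (shown, hidden): whatever misses the cut is never displayed."""
    ranked = sorted(posts, key=score, reverse=True)
    return ranked[:slots], ranked[slots:]


posts = [
    Post("friend_video", age_hours=2, affinity=0.9, engagement=300, media_type="video"),
    Post("news_report", age_hours=5, affinity=0.1, engagement=20, media_type="link"),
    Post("family_photo", age_hours=1, affinity=0.8, engagement=50, media_type="photo"),
]
shown, hidden = build_feed(posts, slots=2)
print([p.post_id for p in shown])   # ['friend_video', 'family_photo']
print([p.post_id for p in hidden])  # ['news_report']
```

In this toy run, the low-engagement news report from a distant contact is the post left out. No one judged it unimportant; it falls below the cut purely because of how the assumed weights combine, which is the kind of invisibility at issue here.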

The selected posts are meant to keep users on the platform longer, because Facebook’s business model is based on selling targeted ads. The more time users spend on the platform, the more ads they are exposed to, and the more data they generate that can be used to refine the targeting of those ads. That is why the platform is so concerned with showing users what they want to see: so they do not leave.

Yet although Facebook has been part of people’s lives for some time, a large number of users, most of them according to research by the Pew Research Center (Smith, 2018), say they do not know how it works. Overall, 53% of Facebook users say they “don’t know anything” or “not much” about how posts are included in their newsfeeds, and only 14% say they know “a lot.” Although the percentage is higher among older users, 41% of 18-to-29-year-olds and 50% of 30-to-49-year-olds also say they do not understand how this selection works. In other words, it is not a generational problem.

Not knowing how Facebook operates, or by what criteria content becomes (in)visible, becomes a problem when users turn to the platform for news and journalistic content. If the journalistic content shown to users is directly shaped by the platform’s operating logic and its algorithms, then the content the algorithms do not select will necessarily end up invisible. And what are the implications of this invisibility?

Editing history and the invisibility of the invisible

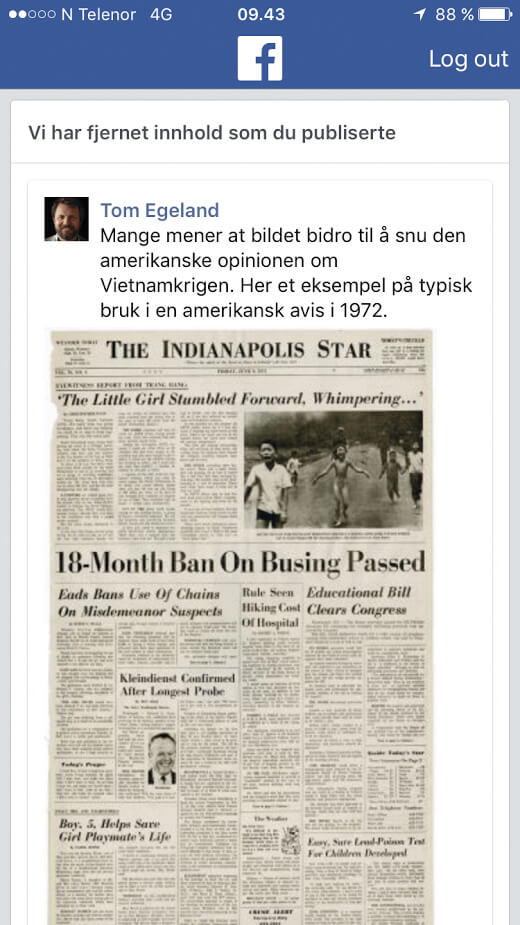

In August 2016, Norwegian writer and journalist Tom Egeland posted a set of war images on his Facebook profile to discuss the use of iconic photographs in journalism, specifically powerful images of war and conflict that played an important role in the unfolding of historical events (Egeland, 2019). Egeland posted seven images, but the next day he realized that Facebook had deleted one of them: the famous photograph The Terror of War. According to the platform’s notice, the photo had been removed because it violated Facebook’s policies on nudity, sexual content, and child pornography.

The deleted photograph, also known as Napalm Girl, was taken by Nick Ut during the Vietnam War in 1972. It shows a group of children fleeing a napalm attack, and at the time it played a central role in making the U.S. civilian population aware of the horrors of the war their country was waging in Vietnam, about which the government withheld information.

The photograph The Terror of War.

Source: Nick Ut

Egeland said he was shocked and surprised by the removal, because “The image itself is iconic and world-famous and there is no sexuality in it. It’s just a picture of a war victim, so if that’s an example of nudity, it’s very stupid” (Koren & Wallenius, 2016). But the image kept being removed every time it was posted, including when it appeared on the front page of a 1970s newspaper.

Tom Egeland’s screenshot with the information that his publication had been removed.

Because Tom Egeland is a well-known Norwegian author and journalist, the case quickly gained coverage in the Norwegian media. The removal triggered a nationwide wave of protest, and many professionals, news outlets, and users also posted the image on their profiles. All of them had the content deleted, and some were banned from the platform. Espen Egil Hansen, editor-in-chief of the major Norwegian newspaper Aftenposten, said the removal was not about “nudity” but about freedom of expression, history, and war (Stenerud, 2016).

In response, the newspaper published an open letter to Facebook’s CEO, in which Hansen (2016) called Mark Zuckerberg “the most powerful editor in the world” and accused him of abusing his power and undermining the creation of a democratic society. In an interview with The Guardian, a Facebook spokesperson said, “While we recognize that this photo is iconic, it is difficult to create a distinction between allowing a photograph of a naked child in one instance and not in others” (Hansen, 2016). The controversy even reached Norwegian Prime Minister Erna Solberg, who called the platform’s action “highly regrettable” and said that removing the images meant “editing our common history” (Wong, 2016).

This removal suggests that in a world where Facebook is a source of information, such an image would never have circulated widely and would never have played the role it did in the U.S. anti-war movement of the 1970s. In September, twenty days after the controversy began, the platform decided to reconsider the case and allow the image to circulate. Through a spokesperson, the company said that after listening to the “community” it recognized “the history and global importance of this image in documenting a particular moment in time” (Heath, 2016). For Solberg (2016), “to delete posts containing such images is to limit freedom of expression, democracy, the right to criticize and question, and to see past events as they really were, not as they were considered by a giant corporation.”

None of the platform’s explanations shed light on how content is selected or how the algorithm’s criteria are defined. Responding to the prime minister, Facebook chief operating officer Sheryl Sandberg reiterated the importance of keeping nudity off the platform and described such decisions as “difficult”: “We don’t always get it right. Even with clear standards, filtering millions of posts each week on a case-by-case basis is a challenge” (Solberg, 2016). Peter Münster, Facebook’s communications manager for the Nordic countries, said, “It’s important to remember that Facebook is a global community and we should have a set of rules that apply to any cultural standard. That is not perfect, but it is difficult to do it differently when managing a global community” (Reuters, 2016).

But what is this “global community” that Facebook refers to? Is it really global, or merely a reflection of U.S. thinking? Or is it a reflection of a very specific group of people who live in Silicon Valley and share the same values? And what does it mean to take such a specific, localized worldview as the standard for filtering content globally?

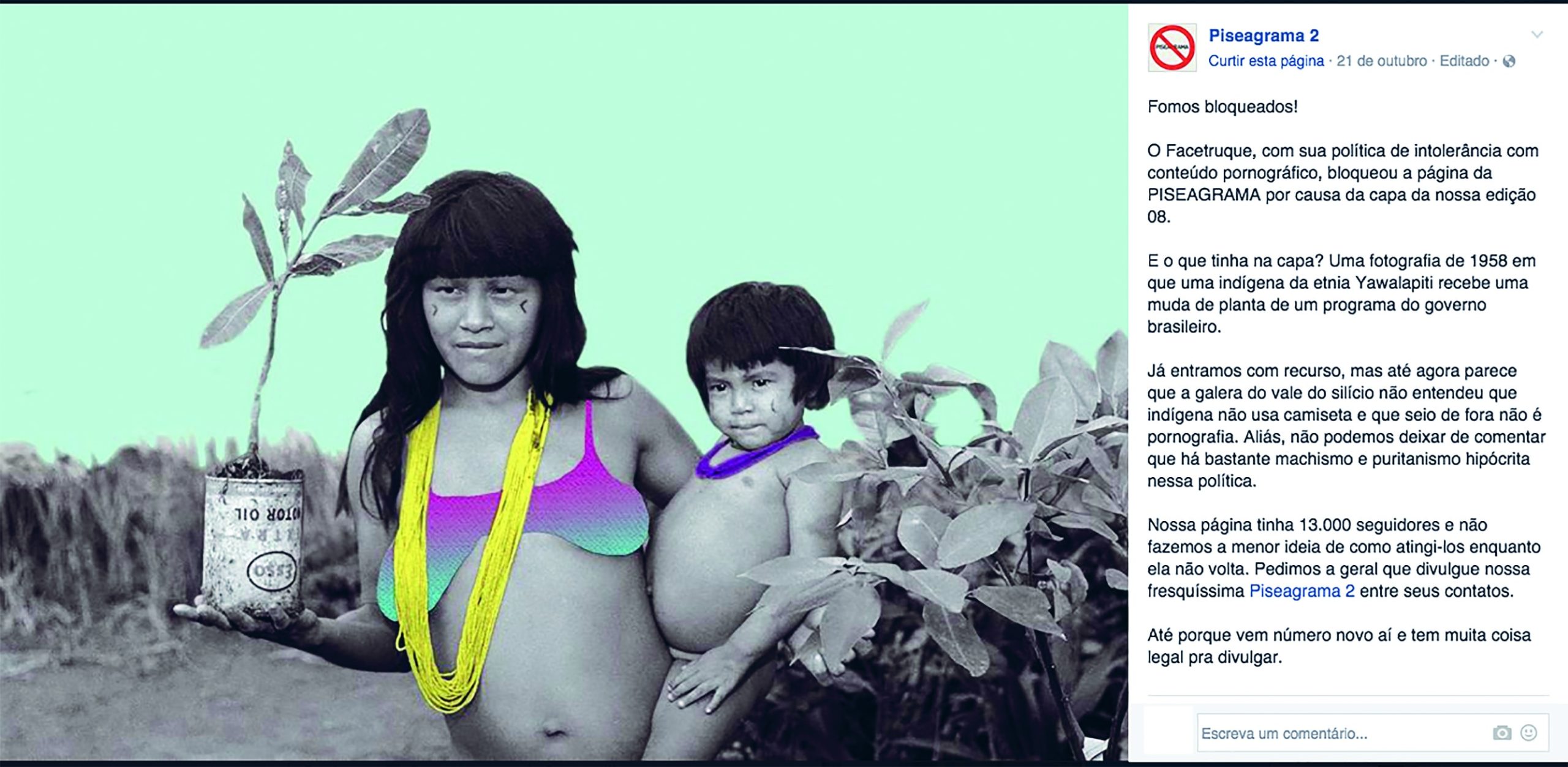

The second example occurred in October 2016, when Facebook deleted a post by Piseagrama magazine containing a photograph of a bare-breasted Yawalapiti woman from the Amazon. The photograph was taken in 1958 during a reforestation campaign conducted by the Brazilian military government and illustrated the cover story of the magazine’s new issue. However, because the platform does not allow female nipples to be shown (allowing them only in breastfeeding photos, and only after much controversy), the image was deleted. The magazine’s Facebook page was deleted along with the image, and the magazine lost its content and its network of readers and followers. After creating a new page, the editors republished the photo with a bra digitally added to the Indigenous woman.

Reposted photo of Indigenous woman with bra digitally added to meet Facebook content moderation standards.

Because the repercussions were limited to the page’s followers, they were not enough to reverse the censorship, and the platform never responded to the page’s administrators. In the post in which the photo was republished, Piseagrama’s editors commented on the removal: “It seems that Silicon Valley folks did not understand that Indigenous people do not wear T-shirts and those bare breasts are not pornography. In fact, we cannot help but comment that there is a lot of sexism and hypocritical puritanism in this policy” (Piseagrama 2, 2016).

It is worth noting that this was not the first time Facebook had made images of Indigenous people invisible. In April 2015, for example, a post by Brazil’s Ministry of Culture was censored by the platform because of a photograph taken in 1909 by Walter Garbe. The image, which depicted a Botocudo Indigenous couple, both bare-breasted, had been published on Indigenous Peoples’ Day to publicize the launch of the Portal Brasiliana Fotográfica [Brasiliana Photo Portal]. At the time, the then Minister of Culture, Juca Ferreira, said Facebook’s block showed how the platform “tries to impose its own moral standards on Brazil, and on the other nations of the world where the company operates, acting illegally and arbitrarily. . . . Facebook and other global companies operate in a logic very close to that of colonial times.” After the image was unblocked, Facebook said “it is not easy to find the ideal balance between allowing people to express themselves creatively and maintaining a comfortable experience for our global and culturally diverse community” (Zero Hora, 2015).

These two examples show how algorithmic mediation influences the circulation of journalistic content. And while The Terror of War was eventually reinstated, the Piseagrama photograph remained invisible to Facebook users. Because algorithmic logic determines what users see (or do not see), content that does not meet the platform’s criteria is simply excluded (or made invisible), even if that means editing global history or further erasing people who are already largely invisible in our society.

The difficult task of editing

On several occasions, Facebook has argued that it is “hard” to edit content and decide which images may or may not circulate on the platform. We know it is not easy. In 1972, the decision to publish Nick Ut’s photo was itself difficult, because it is a powerful image that violates the privacy rights of the children portrayed. However, guided by journalistic professional standards and values, and weighing the nuances and singularities of the image, the historical moment, and subjective and circumstantial issues, editors and journalists decided that publishing the photograph was important and chose to do so, accepting all the risks involved.

Journalism is practiced according to a set of norms and standards shared by those who recognize themselves as professionals, and these norms are meant to prepare journalists to make “difficult” decisions like this one. But when a platform such as Facebook presents itself as the infrastructure for the circulation of journalistic content, it adds several layers of intervention on top of the mediation of professionals trained to select content. In this process, whatever editors and journalists decide, it is ultimately algorithmic mediation that determines whether an image circulates.

As discussed above, the criteria on which algorithms base their decisions aim to increase the time users spend on the platform, often by favoring content for its capacity to generate engagement. Worryingly, these same criteria favor, or even encourage, the circulation of misinformation and sensationalist content that provokes strong emotions (Wardle, 2017). Moreover, by their very nature, algorithms cannot deal with singularities and particularities such as those presented in this study, which are central to journalistic selection.

We know that Facebook is an important source of information around the world. And even when people do not use Facebook to find news, they end up using other platforms that also rely on algorithmic selection, such as Google, Instagram, and Twitter.

So how can we deal with this situation? How can those of us interested in understanding and building better technologies find ways to make algorithmic selection less harmful to the circulation of reliable news and information? Given the ever-growing volume of content circulating online, it is impossible to imagine a solution that does not include algorithmic selection. If this logic of selection runs counter to the values of journalism, and if algorithmic selection is based on categorizing and standardizing content, how can we build new technologies that bring these two logics together and also encompass the singularities that escape both? Or how can we build technologies that do not harm public access to reliable sources of news and information, given that algorithms inherently cannot deal with subjectivity, while journalistic and historical content (and the “social” and the “human”) is full of it?

1 Big Five is a term used to refer to the group formed by the largest and most important digital platforms in the Western world: Google, Amazon, Facebook, Microsoft, and Apple (Van Dijck et al., 2018).

2 See “News Feed,” https://www.facebook.com/formedia/solutions/news-feed. Retrieved May 5, 2020.

REFERENCES

DIAKOPOULOS, Nicholas. Algorithmic accountability: Journalistic investigation of computational power structures. Digital Journalism, v. 3, n. 3, pp. 398-415, 2015.

EGELAND, Tom. Email. Personal communication. February 2019.

GILLESPIE, Tarleton. A relevância dos algoritmos. Translated by Amanda Jurno. Parágrafo, São Paulo, v. 6, n. 1, pp. 95-121, Jan./Apr. 2018a.

GILLESPIE, Tarleton. Custodians of the Internet: Platforms, content moderation, and the hidden decisions that shape social media. New Haven: Yale University Press, 2018b.

HANSEN, Espen Egil. Dear Mark. I am writing this to inform you that I shall not comply with your requirement to remove this picture. In: Aftenposten. 08 Sept. 2016. Available at: https://www.aftenposten.no/meninger/kommentar/i/G892Q/Dear-Mark-I-am-writing-this-to-inform-you-that-I-shall-not-comply-with-your-requirement-to-remove-this-picture. Accessed: 09 Feb. 2019.

HEATH, Alex. Facebook decides to lift its ban on a controversial Vietnam War photograph. In: Business Insider. 09 Sept. 2016. Available at: https://www.businessinsider.com.au/facebook-lifts-ban-on-napalm-girl-vietnam-war-photo-2016-9. Accessed: 09 Feb. 2019.

INTRONA, Lucas D. Algorithms, governance, and governmentality: On governing academic writing. Science, Technology & Human Values, v. 41, n. 1, pp. 17-49, 2016.

JURNO, Amanda Chevtchouk. Agenciamentos coletivos e textualidades em rede no Facebook: uma exploração cartográfica. 2016. Master’s thesis (Communication), Universidade Federal de Minas Gerais, Belo Horizonte, 2016.

KOREN, Beate A.; WALLENIUS, Hege. Facebook fjernet foto Tom Egeland la ut av krigsoffer. In: VG. 20 Aug. 2016. Available at: https://www.vg.no/nyheter/i/62R6e/facebook-fjernet-foto-tom-egeland-la-ut-av-krigsoffer. Accessed: 09 Feb. 2019.

LATOUR, Bruno. Reagregando o social: Uma introdução à teoria do ator-rede. Salvador: Edufba, 2012.

O’NEIL, Cathy. Weapons of math destruction: How big data increases inequality and threatens democracy. New York: Broadway Books, 2017.

PISEAGRAMA 2. 21 Oct. 2016. Post. In: Facebook. Available at: https://www.facebook.com/revistapiseagrama/photos/1836470833263129. Accessed: 23 Oct. 2016.

PLANTIN, Jean-Christophe et al. Infrastructure studies meet platform studies in the age of Google and Facebook. New Media & Society, v. 20, n. 1, pp. 293-310, 2018.

REUTERS. Países da União Europeia aprovam leis mais duras contra Google e Facebook. In: O Globo. 15 Apr. 2019b. Available at: https://oglobo.globo.com/economia/paises-da-uniao-europeia-aprovam-leis-mais-duras-contra-google-facebook-23600649. Accessed: 19 July 2019.

SMITH, Aaron. Many Facebook users don’t understand how the site’s news feed works. In: Pew Research Center. 05 Sept. 2018. Available at: https://www.pewresearch.org/fact-tank/2018/09/05/many-facebook-users-dont-understand-how-the-sites-news-feed-works/. Accessed: 29 Nov. 2020.

SOLBERG, Erna. Facebook had no right to edit history. In: The Guardian. 09 Sept. 2016. Available at: https://www.theguardian.com/commentisfree/2016/sep/09/facebook-napalm-vietnamese-deleted-norway. Accessed: 09 Feb. 2019.

STENERUD, David. The girl in the picture saddened by Facebook’s focus on nudity. In: Dagsavisen. 02 Sept. 2016. Available at: https://www.dagsavisen.no/verden/the-girl-in-the-picture-saddened-by-facebook-s-focus-on-nudity-1.773232. Accessed: 09 Feb. 2019.

VAN DIJCK, José; POELL, Thomas; DE WAAL, Martijn. The platform society: Public values in a connective world. Oxford: Oxford University Press, 2018.

WARDLE, Claire. Fake news. It’s complicated. In: First Draft – Medium. 16 Feb. 2017. Available at: https://medium.com/1st-draft/fake-news-its-complicated-d0f773766c79. Accessed: 10 Dec. 2019.

WONG, Julia Carrie. Mark Zuckerberg accused of abusing power after Facebook deletes ‘napalm girl’ post. In: The Guardian. 09 Sept. 2016. Available at: https://www.theguardian.com/technology/2016/sep/08/facebook-mark-zuckerberg-napalm-girl-photo-vietnam-war. Accessed: 09 Feb. 2019.

ZERO HORA. Após Ministério da Cultura ameaçar processo, Facebook republica foto censurada. Fotografia de índia com seios expostos foi retirada da rede social. In: Zero Hora. 18 Apr. 2015. Available at: http://zh.clicrbs.com.br/rs/noticias/noticia/2015/04/apos-ministerio-da-cultura-ameacar-processo-facebook-republica-foto-censurada-4743029.html. Accessed: 27 June 2017.