EN PT/BR

Alternative ways of algorithmic seeing-understanding

How can we think differently about algorithmic ways of seeing-understanding? In a world where algorithms of seeing-understanding are becoming surveillance-military tools that mostly don’t do us any good (except perhaps for some Silicon Valley companies and military superpowers) how can we, even if just a little, break from it? I write this text as a provocation1, trying to find a way through these questions that continue to bother me. . . but also to try to understand how it is possible to relate to them, and perhaps use them to guide my practice.

In order to (try to) “think differently,” I want to start by breaking down the language in these questions. What I term seeing-understanding is an initial attempt to provocatively de-language the often-hyped field of “Computer Vision” (yes, capitalized).

Since 2009, with the creation of a large database called ImageNet, the use of machine learning to create Computer Vision algorithms has sky-rocketed, with results that have gathered much academic, industrial, and public interest. ImageNet was created by Fei-Fei Li, a professor at Stanford University, and her team. What they created, broadly speaking, was a visual encyclopedia of the world of objects, where each “thing” in the taxonomy was represented by a collection of images (see Crawford & Paglen, 2019, for a critical discussion). The project used the labor of Amazon Mechanical Turk workers, extremely poorly paid global platform work, to label the images. That database then became the fuel to create new algorithmic types of analysis by becoming a default training dataset, leading to a huge growth of Computer Vision applications.

Years later, in 2015, Fei-Fei Li presented a widely popular TED Talk presenting her vision for ImageNet (Li, 2015). (I love these TED Talks, particularly due to their cheesy attempt in creating a compelling and emotional narrative behind a topic.) During the fifteen minutes of her well-composed story, she laid out much of the motivations and worldviews behind machine learning-

-enabled Computer Vision. Her crucial point was that cameras can take pictures, but they cannot really understand what is actually happening in them. They can only see the world as pixels, or as Li called them, “lifeless numbers.” The images do not carry meaning within themselves. As “to hear is not the same as to listen,” taking pictures is not the same thing as seeing.

Seeing, Fei-Fei Li said, is actually understanding. What ImageNet allegedly makes possible for current Computer Vision algorithms to do is making sense of what is in images by drawing on patterns created through analysis of huge swaths of labeled data. As she further explained, “vision starts in the eyes but, in fact, it actually happens in the brain.” Understanding the world, a brainy cognitive action, means using previous experiences to make sense of the world. By gathering more and more data, she argued, the training of computers would allow algorithms to see-

-understand like us, humans.

I argue that acknowledging Computer Vision as a process of seeing-understanding can help us in the process of critically rethinking it beyond the current hegemonic perspectives. It serves to underline the fact that this is not just about seeing/sensing the world. Computer Vision is in fact rooted in a much deeper, complex epistemological process of making sense of the world, making choices about what to see, what to prioritize, how to understand what is there. It reminds us that seeing is an active process, as already had been described by the painter Henri Matisse in 1954: “To see is itself a creative operation, requiring an effort” (Matisse, 1954). There are many ways of seeing, as well as many ways of understanding, and these matter.

I want to use a case from Brazil to elaborate on how technology-enabled seeing-understanding is such a complex and layered action. Monteiro and Rajão (2017) studied how remote sensing plays a role in detecting and tracking deforestation in the Amazon rainforest. Although there is plenty of satellite data being gathered, all of it needs to go through the scrutiny of scientists/analysts who outline the deforested areas in the images. The satellite data, although it may seem really complete and detailed, as it comes from expensive and precise instruments of seeing, doesn’t tell the full story in itself. The scientists/analysts need to do a lot of work on it to make it useful: stitch together multiple datasets, collaborate across fields and locations, add in their qualitative fieldsite knowledge, etc. These specialists interpret what they see through the “technological” lens of the satellite to determine, both as scientists and concerned citizens, whether what they are seeing is deforestation or just a cloud passing by. And more, they have to somehow balance their interpretation to make sure it won’t be misunderstood by society and policymakers, who may see the final results as exaggerated or inaccurate 2.

Looking at satellite images, the scientists/analysts are trying to understand and make sense of the world, interpreting through many layers of analysis, situated knowledge, and embodiment. Their decisions of what to see have direct effects in the world, which also informs their particular ways of seeing-understanding. In other words, the information infrastructures for monitoring forest loss operate “at the intersection of mutually interfering political demands, incommensurable goals, and competing organisational logics” (Vurdubakis & Rajão, 2020, p. 18). Although “the Amazonian rainforest remains the most closely observed in the world” (Vurdubakis & Rajão, 2020, p. 18), what is made visible depends on the sociotechnical “unstable network formations” of geospatial technologies and humans.

This discussion is, of course, not really new. Many scholars, particularly historians and theoreticians of vision and Science, Technology, and Society (STS) have discussed what is behind the effort of vision. Berger (2008), for example, talked about “ways of seeing,” saying that the process of seeing paintings, as well as the process of seeing anything, is much less spontaneous and natural than we tend to believe—it depends in large part on habits and conventions. There are many more who discuss this, such as Crary (1992), for whom the body is an intrinsic part of our ways of seeing; Parks (2005), for whom the satellite, much like previously described, can work as a way of seeing that hides/shows in particular ways; and Impett (2020), for whom different computer vision models can see artistic production differently. The fact is: neither in the artistic paintings, nor in the satellite views of the world, or even in the algorithms of computer vision is there a “view from nowhere” (Haraway, 1988), a distant, removed way of seeing that is not also tied to a particular way of understanding. Seeing–understanding is an ideological process, and whenever technology is involved, there are many more layers to be considered.

So, with that established, we can jump to Computer Vision today. The most prevalent and powerful algorithms of seeing–understanding in our society have strong links to consumer surveillance and the military. Or, as described by artist and researcher Adam Harvey, “The most powerful computer vision surveillance tools developed today come from Facebook, Intel, IBM, and Google and are primarily used for tracking consumers” (Harvey, 2020). In the logic of “data capitalism” (West, 2017), companies are using their enormous collected data to create automated personalized predictions of behavior at scale: “increasingly targeted on individual actions, sentiments, and desires rather than stratified population aggregates” (Mackenzie, 2013, p. 392). Alongside the purposeful erosion of privacy in the “invisible visual culture” of machine-seeing, military technology uses are of particular concern. From missile guidance to drone operation, companies and states work together to create dangerous and lethal seeing-machines that “surveil and punish,” often automatically.

The story goes that “If the only tool you have is a hammer, you may be tempted to see everything like a nail.” The way the hegemonic algorithms of seeing-understanding work is directly tied to the surveillance and military gaze, and their economic, racial, social ways of seeing-sensing-detecting and understanding-sensemaking-comprehending. So, just as Katherine Ye in the first chapter of this publication discusses how Silicon Valley creates tools for certain people and by certain people, in the same way these algorithms of seeing-understanding are seeing and understanding for some people, and some people/things are being seen and understood. This, which may seem obvious, is in fact a very active exercise of power that we need to center in our attempt to think about alternatives.

Algorithms of seeing-understanding, as much as any other algorithms, are not black boxes, even if they are undoubtedly clouded by many layers of corporate secrecy and technical languages. Their ways of seeing-understanding, and not only the uses they are being put to, are being directly shaped by particular visions and imaginaries of what seeing-understanding through machines is and can do. This relates to Louise Amoore’s calls for focusing on the opacity of algorithms instead of trying to make them “transparent” or “ethical” (Amoore, 2020). She says: “Where the political demands for transparency reassert the objective vision of a disembodied observer, to stay with opacity is to insist on the situated, embodied, and partial perspective of all forms of scientific knowledge.” Amoore is pointing exactly to the need to foreground how understanding is happening and what it is privileging, rather than trying to somehow believe we will ever reach an imagined algorithmic objectivity. Although I may agree with Fei-Fei Li that what really matters is understanding the images, I argue that there are many different ways of seeing-understanding that can be considered or privileged, and that this is crucial for breaking from problematic hegemonic perspectives.

Thus, we can tentatively conclude that our ways of finding alternatives could/should focus both on: 1) Experimenting with ways of seeing and understanding that are different from the imperatives of military-surveillance Computer Vision; and 2) Breaking away from the power dynamics that mire current Computer Vision algorithms, inverting or subverting power relations.

How could this look in practice? I have three suggestions to help us start imagining and conjecturing alternatives. These are (once again) not comprehensive, but provocations for further discussion.

1) The need for a disobedient gaze. How can algorithms turn around what/who is seen, and focus instead on surveilling the most powerful, as opposed to those marginalized?

One of the projects that I would say advances in this direction is Forensic Architecture, from the U.K. Their objective is using the large amount of data generated by surveillance/data capitalism in order to create different ways of seeing, a “counter-forensics” that surveils states, police, and the military. More related to Computer Vision, their project Triple-Chaser created an algorithm that captures images from the Internet and tries to detect what types of bombs are being used in certain regions of the world. Their goal is to pressure the companies that create these bombs and hold them responsible. In direct collaboration with this project is Harvey’s VFRAME, which more broadly offers open-source computer vision detection tools to help/support the work of activists and journalists that cover wars: “Facilitating visual testimony from conflict zones around the world by using computer vision to navigate large volumes of citizen and journalist collected data” (Harvey, 2020).

Frame from Forensic Architecture’s Triple-Chaser investigation.

Source: Forensic Architecture/Praxis Films, full video available at:

https://forensic-architecture.org/investigation/triple-chaser

VFRAME’s website (https://vframe.io/).

Source: VFRAME https://vframe.io/

The idea of a disobedient gaze is also present in the project OpenOversight (2020), which offers “a public, searchable database of law enforcement officers.” In a country like the U.S., where police brutality is a severe problem affecting especially marginalized communities, the project seeks to allow citizens to “identify an officer with whom [they] have had a negative interaction.” Eileen McFarland’s talk “Watch the Watcher: Facial-Recognition & Police Oversight” discusses how facial recognition was used by the project to preprocess the data and serve as a support to human photo sorting (McFarland, 2019). Facial recognition is already being used by police officers to identify citizens, with consequences that are often terrible (e.g. identifying and punishing activists). So instead of being the police using algorithms to make identifying people easier, the project instead gives the citizens the power to investigate and identify police officers that may have committed violent acts toward citizens.

2) The importance of facing errors, glitches, and inefficiencies of these systems both as a sign of their limitations and as a way to think otherwise. Computer Vision algorithms, as any algorithms or statistical models, are intrinsically fallible, and should be seen as the biased and inaccurate tools they are. Thus, we need to use this to think about how we could foreground its inefficiency and use that to segue into alternatives.

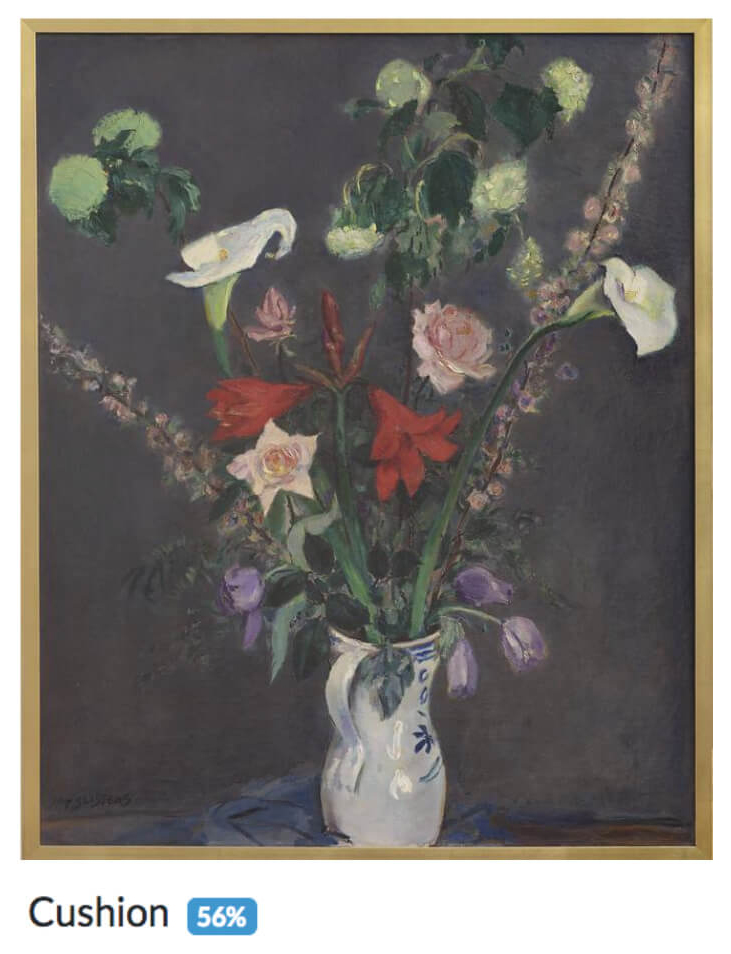

Together with researcher and artist Bruno Moreschi, we have created the Recoding Art project, in which we used commercial Computer Vision from Google, Amazon, IBM, and other companies to try to see-understand works of art from the collection of the Van Abbemuseum, in Eindhoven, Netherlands. As can be seen in Figures 3 and 4, colorful paintings tend to be read as cushions, which shows how the machinic gaze has been particularly trained to see the world in a commercial way, as products. It also reminds us, on the other hand, how the visual content of works of art goes beyond the confines of the museum and has become part of popular household products. It also relates to museum shops and their practices of transforming images of artworks into souvenirs. Once these results are conveyed to the public they open up the museum, question the work of art’s normative interpretations, and invite the public to experiment with other ways of seeing: What if George Braques’ painting is, indeed, a cushion? The work aims to critique Computer Vision’s normative ways of seeing, while also indicating they can be used in ways that go against their intentions, resulting in creative potentials (Pereira & Moreschi, 2020).

La Roche-guyon (1909), by Georges Braque,

read by Computer Vision as cushion and home decor.

Source: Bruno Moreschi & Gabriel Pereira

Vaas met Bloemen (1929), by Jan Sluijters,

read by Computer Vision as cushion.

Source: Bruno Moreschi & Gabriel Pereira

3) More than ever, considering: What should we refuse to build/create/deploy? This means a practice that focuses on speculating and/or acting to disassemble the futures we don’t want. This can help us in the process of thinking otherwise as it highlights the consequences of dangerous models, and indicates we have agency over creating technology (rather than it having agency in itself).

In the case of facial recognition, we have seen strong movements around the world that question its core bases. Fight for the Future, a group of artists, engineers, activists, and technologists in the U.S., have led a cross-institutional movement to ban facial recognition due to its ineffective, unequal, and unjust character. It is crucial for us in Brazil also to consider this, especially when these algorithms are already being used across the country with terribly racist results: about 90% of arrests triggered by facial recognition systems were of people of color (Nunes, 2019).

Responses to facial recognition can take different shapes other than protesting. A creative critical response has been the many artistic projects to avoid facial recognition, such as Zach Blas’ Facial Weaponization Suite, which creates different amorphous masks from workshops and data aggregates of many faces (Blas, 2011). A different kind of response is direct rejection: worker movements such as Tech Won’t Build It represent workers’ refusal to construct tools for military and other nefarious uses. Salesforce employees, in a letter to their CEO, said: “We cannot cede responsibility for the use of the technology we create, particularly when we have reason to believe that it is being used to aid practices so irreconcilable to our values” (Kastrenakes, 2018). This retaking of control reverses the power structures, retaking responsibility for the creation of technology and saying we can do it in a different way from what is currently being done.

Frame from Zach Blas’ “Facial Weaponization Communiqué: Fag Face,” with one of the created masks.

Source: Zach Blas (CC BY-NC-ND 3.0), full video available at: https://vimeo.com/57882032

These are just some of the ways we can try to see-understand differently from current and hegemonic Computer Vision: looking disobediently/up, embracing errors/inefficiency, and openly rejecting what we don’t want. None of these directions, though, are solutions in themselves. The task of thinking otherwise is very complicated, as it requires to break from so much that has been intentionally made normal and unquestionable. It requires a lot of creativity and struggle as well.

Fei-Fei Li concluded her TED Talk by saying that “Little by little, we are giving sight to the machines. First, we teach them to see. Then, they help us to see better” (Li, 2015). My hope, instead, is that, little by little, we may expand how machines see-understand beyond the hegemonic imperatives of the surveillance-military uses, and instead be able to use them in a more creative-just-equitable-reflexive-embodied-(insert your word here), or. . . not use them at all (whenever that makes more sense). My ultimate hope is contributing to the refusal of technological determinism and techno-utopianism not only through critique, but also by offering (more-or-less-concrete) alternatives.

1 This text is an enhanced transcription of my presentation at the Affecting Technologies, Machining Intelligences (AT/ MI) event. Thanks to all the people who helped me think through these questions in many informal conversations, in particular Katherine Ye, Bruno Moreschi, Pablo Velasco, and Rodrigo Ochigame.

2 In a government that is against the idea that there is deforestation (such as the current far-right Bolsonaro government), the way to deal with scientific reports can change (and they have). The technological way of seeing the world through the satellite, even when the Amazon is actually being destroyed, can be changed to turn a blind eye to reality.

REFERENCES

AMOORE, L. (2020). Cloud Ethics: Algorithms and the Attributes of Ourselves and Others. Duke University Press.

BERGER, J. (2008). Ways of Seeing. Penguin UK.

BLAS, Z. (2011). Facial weaponization suite. https://zachblas.info/works/facial-weaponization-suite/

CRARY, J. (1992). Techniques of the Observer. MIT Press.

CRAWFORD, K., & Paglen, T. (2019). Excavating AI: The politics of training sets for machine learning. Retrieved from https://anatomyof.ai/

HARAWAY, D. (1988). Situated knowledges: The science question

in Feminism and the privilege of partial perspective. Feminist Studies, 14(3), 575. https://doi.org/10.2307/3178066

HARVEY, A. (2020). Building Archives for Evidence and Collective Resistance. Presented at Transmediale 2020, Berlin.

IMPETT, L. (2020). Early Modern Computer Vision. Presented at The Renaissance Society of America, Philadelphia. https://rsa.confex.com/rsa/2020/meetingapp.cgi/Paper/4424

KASTRENAKES, J. (2018). Salesforce employees ask CEO to ‘re-

-examine’ contract with border protection agency – The Verge. Retrieved 2020-08-07 from https://www.theverge.com/2018/6/25/ 17504154/salesforce-employee-letter-border-protection-ice-immigration-cbp

LI, F.-F. (2015). How we’re teaching computers to understand pictures. Retrieved 2020-08-07 from https://www.ted.com/talks/fei_fei_li_how_we_re_teaching_computers_to_understand_pictures

MACKENZIE, A. (2013). Programming subjects in the regime of anticipation: Software studies and subjectivity. Subjectivity, 6(4), 391-405. https://doi.org/10.1057/sub.2013.12

MATISSE, H. (1954). Looking at life with the eyes of a child. ArtReview. https://artreview.com/archive-matisse-looking-at-life-with-the-eyes-of-a-child-1954/

MCFARLAND, E. (2019). Watch the watcher: Facial-recognition & police oversight. Retrieved 2020-08-07 from https://www.youtube.com/watch?v=f0xwvXHqPbU

MONTEIRO, M., & Rajão, R. (2017). Scientists as citizens and knowers in the detection of deforestation in the Amazon. Social Studies of Science, 47(4), 466-484. https://journals.sagepub.com/doi/pdf/10.1177/0306312716679746

NUNES, P. (2019). Levantamento revela que 90,5% dos presos por monitoramento facial no Brasil são negros – The Intercept Brasil. Retrieved 2020-08-07 from https://theintercept.com/2019/11/21/presos-monitoramento-facial-brasil-negros/

OpenOversight. Retrieved 2020-08-07 from https://openoversight.lucyparsonslabs.com/

PARKS, L. (2005). Cultures in Orbit. Duke University Press.

PEREIRA, G., Moreschi, B. (2020). Artificial intelligence and institutional critique 2.0: Unexpected ways of seeing with Computer Vision. AI & Society.

VRAME: Computer Vision Tools For Human Rights Researchers. Retrieved 2020-08-07 from https://vframe.io/

VURDUBAKIS, T., & Rajão, R. (2020). Envisioning Amazonia: Geospatial technology, legality and the (dis)enchantments of infrastructure. Environment and Planning E: Nature and Space, 2514848619899788. https://journals.sagepub.com/doi/pdf/10.1177/2514848619899788

WEST, S. M. (2017). Data capitalism: Redefining the logics of surveillance and privacy. Business & Society, 58(1), 20-41. https://doi.org/10.1177/0007650317718185